Database Answers

IT Services

Who We Are

Changing The Way

Google's Jpegli Revolutionizes Image Compression Quality

Google's Jpegli represents a significant leap forward in image compression technology, promising a 35% enhancement in compression ratios without compromising quality. This innovation leverages advanced algorithms to optimize coding efficiency, ensuring seamless interoperability between encoders and decoders. With the ability to minimize banding artifacts and support higher bit depths for improved dynamic range and color fidelity, Jpegli offers substantial bandwidth and storage savings. As we explore the various facets of Jpegli, from its technical prowess to its potential impact on digital media, it becomes clear that this development could redefine industry standards.

Key Takeaways

- Jpegli enhances image quality by reducing compression artifacts and pixelation.

- It offers a 35% improvement in compression ratio at high-quality compression settings, without sacrificing image fidelity.

- The technology supports 10+ bits per component for superior color accuracy.

- Jpegli is fully interoperable with existing JPEG standards, ensuring wide compatibility.

- It maintains high coding speed while optimizing image compression efficiency.

Jpegli Features

Jpegli is a JPEG coding library that improves image compression while remaining backward compatible with the existing JPEG standard. At high-quality compression settings it delivers a 35% improvement in compression ratio.

By optimizing coding efficiency, Jpegli guarantees that images are compressed more effectively without compromising visual quality. The fully interoperable encoder and decoder adhere strictly to the original JPEG specifications, guaranteeing seamless integration into current workflows.

Additionally, Jpegli's design focuses on speed and efficiency, offering faster processing times while retaining the visual improvements necessary for high-quality images. These advancements make Jpegli an indispensable tool for those seeking superior image compression solutions without sacrificing backward compatibility or performance.
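To put the 35% figure in perspective, the sketch below converts a compression-ratio improvement into a file-size saving. It assumes that "compression ratio" means uncompressed size divided by compressed size and that the gain applies at equal visual quality. The function names and the 1 MB baseline are illustrative and do not come from the Jpegli documentation.

```typescript
// Assumption: compression ratio = uncompressed size / compressed size.
// A ratio that is 35% higher therefore yields a file 1 / 1.35 times as large.
function sizeAfterRatioGain(baselineBytes: number, ratioGain: number): number {
  return baselineBytes / (1 + ratioGain);
}

// Fraction of the baseline file size that is saved for a given ratio gain.
function savingFraction(ratioGain: number): number {
  return 1 - 1 / (1 + ratioGain);
}

const baselineBytes = 1000000; // hypothetical 1 MB JPEG from a traditional encoder
const reducedBytes = sizeAfterRatioGain(baselineBytes, 0.35);

console.log(Math.round(reducedBytes)); // 740741
console.log((savingFraction(0.35) * 100).toFixed(1) + "%"); // 25.9%
```

Under these assumptions, a 35% better compression ratio corresponds to files roughly 26% smaller, not 35% smaller, which is worth keeping in mind when estimating bandwidth and storage savings.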

Image Quality Improvement

Jpegli enhances image quality by performing more precise computations, resulting in clearer images with fewer observable artifacts. This innovative approach addresses common issues such as banding artifacts, particularly in slow gradients, thereby delivering a smoother visual experience.

By supporting 10+ bits per component, Jpegli notably improves dynamic range and color fidelity. In addition, it compresses images more efficiently, optimizing storage and bandwidth without sacrificing quality. This results in a noticeable reduction of compression artifacts, making images appear more natural and less pixelated.
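The link between bit depth and banding can be illustrated with a deliberately simplified model: quantize a slow brightness gradient at 8 and at 10 bits per component and count how many distinct levels survive. This is only a sketch of the general principle. It ignores the DCT and everything else a JPEG codec does, and the gradient range and sample count are arbitrary assumptions.

```typescript
// Quantize a value in [0, 1] to an integer code at the given bit depth.
function quantize(value: number, bits: number): number {
  const maxCode = (1 << bits) - 1;
  return Math.round(value * maxCode);
}

// Count how many distinct codes a linear gradient from `start` to `end`
// occupies when sampled at `samples` evenly spaced positions.
function distinctLevels(start: number, end: number, samples: number, bits: number): number {
  const codes = new Set<number>();
  for (let i = 0; i < samples; i++) {
    const t = i / (samples - 1);
    codes.add(quantize(start + t * (end - start), bits));
  }
  return codes.size;
}

// A slow gradient covering 5% of the brightness range across 1000 pixels.
const levelsAt8Bits = distinctLevels(0.4, 0.45, 1000, 8);
const levelsAt10Bits = distinctLevels(0.4, 0.45, 1000, 10);

console.log(levelsAt8Bits); // 14
console.log(levelsAt10Bits); // 52
```

With only 14 levels spread over 1000 pixels, each step is about 70 pixels wide and can show up as a visible band. At 10 bits the same gradient has 52 steps of roughly 19 pixels each, which is much harder to notice.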

Jpegli's ability to produce clearer images while maintaining high compatibility with existing JPEG standards marks a substantial advancement in image compression technology, promising a richer visual experience for users.

Performance and Integration

How does Jpegli manage to integrate seamlessly into existing workflows while maintaining high performance?

Jpegli achieves this by ensuring its coding speed remains comparable to traditional JPEG approaches, enabling smooth workflow integration without demanding additional resources. This efficiency stems from its advanced adaptive quantization heuristics, which allow for dense compression without compromising speed or memory usage.

Additionally, Jpegli's design prioritizes backward compatibility, ensuring that it can be adopted without requiring significant changes to existing systems. By offering a balance of high performance and seamless integration, Jpegli stands out as an innovative tool that enhances image compression quality while fitting effortlessly into current technological ecosystems, paving the way for more efficient and visually pleasing digital experiences.

Future Potential

As Jpegli seamlessly integrates into existing workflows while maintaining high performance, its future potential to revolutionize image compression technology becomes increasingly evident.

The improved compression techniques of Jpegli promise not only significant bandwidth and storage savings but also unparalleled image quality. By leveraging an advanced colorspace, Jpegli guarantees richer, more accurate color representation, thereby enhancing the visual experience. Its capability to achieve higher image fidelity with fewer observable artifacts positions it as a formidable tool in digital imaging.

Additionally, Jpegli's adherence to backward compatibility guarantees that its adoption will be smooth and widespread, paving the way for a new standard in image compression. This innovation could fundamentally reshape how media is stored, shared, and experienced globally.

JavaScript Data Storage Revolutionizes Front-End Efficiency

In the domain of modern web development, JavaScript data storage solutions such as Web storage, IndexedDB, and the Service Worker Cache API have greatly transformed front-end efficiency. These advanced tools enable developers to manage data more effectively, improve offline capabilities, and exercise meticulous control over resource loading. As a result, web applications can achieve optimized performance and enhanced user experience. However, the implications of these technologies extend beyond mere performance gains, prompting a deeper exploration into the balance between data persistence and security, and how these considerations shape the future of web development.

Key Takeaways

- JavaScript data storage options, like localStorage and sessionStorage, simplify state management and enhance front-end performance.

- IndexedDB supports complex data interactions and boosts efficiency with an object-oriented approach.

- WebAssembly innovations significantly improve data processing speed and efficiency in web applications.

- Service Worker Cache API provides powerful offline capabilities and custom cache strategies to optimize resource loading.

- Security considerations in data storage methods ensure robust and efficient front-end performance.

JavaScript Data Storage Options

In the domain of front-end development, JavaScript offers a diverse array of data storage options, each tailored to different persistence needs and application scenarios.

Web storage, encompassing localStorage and sessionStorage, provides convenient in-browser solutions but necessitates thorough security considerations to protect sensitive data.

IndexedDB stands out for its sophisticated, object-oriented approach, enabling more complex data interactions within the browser.

Innovations such as WebAssembly hold significant potential for enhancing performance and efficiency in persistent storage tasks.
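As a minimal sketch of the Web storage option described above, the helpers below store and read JSON values through any object shaped like `localStorage` or `sessionStorage`. `StorageLike`, `MemoryStorage`, `saveJson`, and `loadJson` are hypothetical names introduced for illustration. The in-memory class exists only so the example also runs outside a browser.

```typescript
// Minimal structural subset of the Web Storage interface; the browser's
// localStorage and sessionStorage objects both satisfy it.
interface StorageLike {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
  removeItem(key: string): void;
}

// In-memory stand-in so the sketch runs outside a browser (hypothetical helper).
class MemoryStorage implements StorageLike {
  private items = new Map<string, string>();
  getItem(key: string): string | null {
    return this.items.has(key) ? (this.items.get(key) as string) : null;
  }
  setItem(key: string, value: string): void {
    this.items.set(key, value);
  }
  removeItem(key: string): void {
    this.items.delete(key);
  }
}

// Web storage only holds strings, so values are serialized as JSON.
function saveJson<T>(target: StorageLike, key: string, value: T): void {
  target.setItem(key, JSON.stringify(value));
}

// Returns the fallback when the key is missing or the stored text is not valid JSON.
function loadJson<T>(source: StorageLike, key: string, fallback: T): T {
  const raw = source.getItem(key);
  if (raw === null) return fallback;
  try {
    return JSON.parse(raw) as T;
  } catch {
    return fallback;
  }
}

const prefsStore: StorageLike = new MemoryStorage(); // in a browser: window.localStorage
saveJson(prefsStore, "prefs", { theme: "dark", fontSize: 14 });
const prefs = loadJson(prefsStore, "prefs", { theme: "light", fontSize: 12 });
console.log(prefs.theme); // dark
```

Because any script running on the page can read Web storage, a wrapper like this suits preferences and UI state rather than tokens or other sensitive data.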

Service Worker Cache API

While JavaScript data storage options offer robust methods for handling persistent data, the Service Worker Cache API introduces a powerful mechanism for managing offline capabilities and optimizing resource loading in web applications.

This API allows developers to implement custom cache strategies, ensuring that critical resources are readily available even when the network is unreliable. By leveraging a cache-first strategy, applications can deliver faster load times and enhanced user experiences.

The Service Worker Cache API not only supports offline optimization but also provides fine-grained control over request and response objects, enabling tailored caching solutions. This innovation empowers developers to create highly responsive, user-focused web applications that maintain functionality regardless of connectivity challenges.
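The cache-first strategy mentioned above can be sketched independently of the browser. The example uses a small hypothetical `AsyncCache` interface and an in-memory `MemoryCache` so that it runs anywhere. In a real service worker, the corresponding calls would be `caches.open()`, `cache.match()`, and `cache.put()` inside a `fetch` event handler.

```typescript
// Minimal cache shape used by this sketch (an assumption, not the browser API).
interface AsyncCache<V> {
  match(url: string): Promise<V | undefined>;
  put(url: string, value: V): Promise<void>;
}

// In-memory stand-in so the sketch runs outside a browser (hypothetical helper).
class MemoryCache<V> implements AsyncCache<V> {
  private entries = new Map<string, V>();
  async match(url: string): Promise<V | undefined> {
    return this.entries.get(url);
  }
  async put(url: string, value: V): Promise<void> {
    this.entries.set(url, value);
  }
}

// Cache-first: answer from the cache when possible; otherwise go to the
// network once and store the result for later requests.
async function cacheFirst<V>(
  url: string,
  cacheStore: AsyncCache<V>,
  fetchFromNetwork: (url: string) => Promise<V>
): Promise<V> {
  const cached = await cacheStore.match(url);
  if (cached !== undefined) return cached;
  const fresh = await fetchFromNetwork(url);
  await cacheStore.put(url, fresh);
  return fresh;
}

// Usage with a fake network that counts how often it is called.
async function cacheFirstDemo(): Promise<void> {
  let networkCalls = 0;
  const fakeNetwork = async (url: string): Promise<string> => {
    networkCalls++;
    return "body of " + url;
  };
  const assets = new MemoryCache<string>();
  await cacheFirst("/app.css", assets, fakeNetwork); // miss: goes to the network
  await cacheFirst("/app.css", assets, fakeNetwork); // hit: served from the cache
  console.log(networkCalls); // 1
}
cacheFirstDemo();
```

When this pattern is applied to real `Response` objects, the response has to be cloned before it is stored, because a response body can only be read once.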

Considerations for Data Persistence

Choosing the most appropriate data persistence method is essential for ensuring both the efficiency and security of web applications. With a focus on security considerations, developers must evaluate the risks associated with different storage solutions, such as potential vulnerabilities in cookies and web storage APIs.

Performance enhancements are also important; methods like IndexedDB offer robust, high-performance storage, while server-side storage via fetch() POST requests can guarantee data longevity and integrity. The emergence of WebAssembly further promises significant performance boosts in persistent storage.

Opting for the simplest yet most secure and efficient solution aligns with clean code principles, facilitating responsive design and user-focused experiences. Balancing these factors is critical for innovative and effective web development.

Local LLM Setup and AI Integration

Running a large language model (LLM) locally can greatly enhance development speed and provide greater flexibility. Leveraging Docker containers or virtual environments for local development allows seamless AI collaboration, fostering innovative solutions.

Implementing AI integration directly into the coding environment not only boosts productivity but also guarantees higher code quality and accurate error detection. This setup empowers developers to train and validate their AI models efficiently, resulting in a more responsive design process.

React 19 Unveils Dynamic Transition Innovations

The React 19 beta ushers in a series of transition innovations, promising significant shifts in how developers build and optimize applications. With support for async functions in transitions, React Server Components, and new hooks for managing action state, the framework aims to simplify asynchronous operations and boost rendering performance. These features highlight React 19's dedication to advancing developer tools and improving application responsiveness. As we explore the key beta features, major improvements, and notable enhancements, one can't help but anticipate the profound impact these innovations will have on the development landscape.

Key Takeaways

- React 19 beta introduces support for async functions in transitions to handle pending states, errors, forms, and optimistic updates.

- Integration of `useOptimistic` allows for seamless and efficient optimistic updates.

- New `React.useActionState` hook simplifies managing common asynchronous action cases.

- Enhanced error handling mechanisms improve robustness and error reporting in applications.

- Server Components features with `use` API optimize resource reading during rendering.

Key Beta Features

React 19 beta introduces a suite of advanced features, prominently including support for async functions in transitions, which streamline the handling of pending states, errors, forms, and optimistic updates.

Async functions enhance the developer experience by allowing smooth state transitions during asynchronous operations. The integration of `useOptimistic` provides a robust framework for managing optimistic updates, reducing perceived latency and improving user interactions.

Additionally, the introduction of `React.useActionState` simplifies common action cases by encapsulating state management logic. These enhancements collectively elevate the efficiency of React applications, enabling developers to build more responsive and resilient user interfaces.

This convergence of features marks a significant leap forward in React's capability to handle complex asynchronous workflows with precision and ease.
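The bookkeeping that `React.useActionState` encapsulates (the current state, a pending flag, and error handling around an async action) can be illustrated without React. The sketch below is a framework-free model, not React's implementation. `createActionState` and the "rename" example are hypothetical names chosen for illustration. In React itself the hook is called as `const [state, formAction, isPending] = useActionState(fn, initialState)`.

```typescript
// An action receives the previous state and a payload and resolves to the next state.
type Action<S, P> = (previous: S, payload: P) => Promise<S>;

interface ActionSnapshot<S> {
  state: S;
  isPending: boolean;
  error: string | null;
}

// Hypothetical helper: wraps an async action and records pending and error
// status around each run.
function createActionState<S, P>(action: Action<S, P>, initialState: S) {
  let snapshot: ActionSnapshot<S> = { state: initialState, isPending: false, error: null };
  return {
    get: (): ActionSnapshot<S> => snapshot,
    async dispatch(payload: P): Promise<void> {
      snapshot = { ...snapshot, isPending: true, error: null };
      try {
        const next = await action(snapshot.state, payload);
        snapshot = { state: next, isPending: false, error: null };
      } catch (err) {
        const message = err instanceof Error ? err.message : String(err);
        // Keep the last good state and surface the failure.
        snapshot = { ...snapshot, isPending: false, error: message };
      }
    },
  };
}

// Usage: a hypothetical "rename" action that rejects empty names.
async function renameDemo(): Promise<void> {
  const rename = createActionState(async (_prev: string, next: string) => {
    if (next.trim() === "") throw new Error("Name must not be empty");
    return next;
  }, "Untitled");

  await rename.dispatch("Quarterly report");
  console.log(rename.get().state); // Quarterly report
  await rename.dispatch("   ");
  console.log(rename.get().error); // Name must not be empty
  console.log(rename.get().state); // Quarterly report
}
renameDemo();
```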

Major Improvements

The latest version also introduces React Server Components features and gives developers a new API called `use` to read resources, such as promises and context, during rendering. This advancement is intended to improve transition performance and allow for more efficient state management.

Additionally, React 19 facilitates asynchronous transitions, enhancing the speed and fluidity of UI updates. The introduction of `useOptimistic` empowers developers to handle optimistic updates seamlessly, ensuring a smoother user experience.

The improved error reporting mechanisms for hydration errors in `react-dom` further bolster robustness. These features collectively position React 19 as a pivotal upgrade, driving innovation in how developers manage state and transitions, ultimately leading to more responsive and resilient applications.
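The optimistic-update pattern behind `useOptimistic` can be sketched without React: show the new item immediately, then confirm it or roll it back once the server call settles. The code below is a framework-free illustration under that assumption. `createOptimisticList` and the fake `send` function are hypothetical and are not part of React's API.

```typescript
interface Message {
  text: string;
  sending: boolean; // true while the server has not confirmed the message
}

// Hypothetical helper: shows the message immediately, then either confirms it
// or rolls it back once the async `send` call settles.
function createOptimisticList(send: (text: string) => Promise<void>) {
  let messages: Message[] = [];
  return {
    get: (): Message[] => messages,
    async add(text: string): Promise<boolean> {
      const pending: Message = { text, sending: true };
      messages = [...messages, pending]; // optimistic: visible before the server replies
      try {
        await send(text);
        messages = messages.map((m) => (m === pending ? { text, sending: false } : m));
        return true;
      } catch {
        messages = messages.filter((m) => m !== pending); // roll back on failure
        return false;
      }
    },
  };
}

// Usage with a fake server call that rejects the text "fail".
async function optimisticDemo(): Promise<void> {
  const list = createOptimisticList(async (text) => {
    if (text === "fail") throw new Error("server rejected message");
  });
  const firstAdd = list.add("hello");
  console.log(list.get().length); // 1, shown before the send completes
  await firstAdd;
  await list.add("fail");
  console.log(list.get().map((m) => m.text).join(",")); // hello
}
optimisticDemo();
```

The rollback branch is the important design choice: an optimistic UI is only trustworthy if a failed request visibly undoes the change.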

Notable Enhancements

Building on these major improvements, the notable enhancements in React 19 include advanced error handling for both caught and uncaught errors, support for rendering document metadata tags directly within components, and the ability to return cleanup functions from `ref` callbacks. These enhancements pave the way for dynamic effects and significant UI advancements.

Enhanced error handling fosters robust application stability, while metadata tag support streamlines SEO and accessibility improvements directly within React components. The return of cleanup functions from `ref` callbacks enhances memory management and resource allocation, essential for performance optimization.

Collectively, these updates underscore React 19's commitment to elevating developer experience and application efficiency, empowering developers to deliver more dynamic, high-performing user interfaces.

Release Details

The React 19 beta, announced on April 25, 2024, comes with a detailed upgrade guide to give developers a smooth path to the latest features. The release timeline includes a phased approach to guarantee stability and incremental adoption.

Key upgrade considerations emphasize the integration of async functions in transitions and the use of `useOptimistic` for managing optimistic updates. Developers are advised to familiarize themselves with new hooks such as `React.useActionState` and the updated error handling mechanisms to mitigate potential disruptions.

Libraries leveraging Server Components can now target React 19, enhancing compatibility and performance. This meticulous planning aims to streamline the upgrade process, ensuring developers can harness the innovative capabilities of React 19 efficiently.

Microsoft Challenges App Store Giants With Web Launch

Microsoft's recent web-based launch represents a significant pivot in the app distribution landscape, challenging the entrenched dominance of Apple and Google's app stores. By circumventing the traditional app marketplaces, Microsoft seeks to provide global accessibility and a more developer-friendly environment. The initial focus on renowned titles like Candy Crush and Minecraft hints at a broader strategy poised to attract third-party developers. This shift not only has the potential to disrupt established norms but also raises questions about the future of app distribution and the evolving role of cloud gaming in this new paradigm. Could this be the beginning of a major industry transformation?

Key Takeaways

- Microsoft launches a web-based app store accessible globally on Android and iOS devices.

- The web store circumvents traditional app store regulations by Apple and Google.

- Initial offerings include popular titles like Candy Crush and Minecraft.

- The platform emphasizes cloud gaming for a seamless, high-quality experience.

- Future expansion plans include third-party partnerships to broaden content.

Microsoft's Web-Based App Store

Microsoft's upcoming web-based app store marks a strategic departure from traditional app distribution models dominated by Apple and Google. This innovative approach promises global accessibility, making the platform available on both Android and iOS devices.

By opting for a web-based format, Microsoft circumvents the stringent regulations often imposed by native app marketplaces. This move not only democratizes app access but also paves the way for third-party expansion in the future.

The web-based store can potentially attract developers who seek broader reach without the limitations of traditional app store policies. Microsoft's strategy underscores its commitment to fostering an inclusive, expansive ecosystem, aligning with the broader trend towards seamless, cross-platform user experiences in the digital age.

Initial Content and Offerings

Initially, the web-based mobile app store will feature popular titles such as Candy Crush and Minecraft, focusing heavily on Microsoft's first-party offerings. This strategic emphasis not only capitalizes on the strength of Microsoft's first-party portfolio but also guarantees a robust launch with globally accessible, high-demand content.

Plans for third-party partnerships are slated for future expansion, indicating a clear path for the store's growth beyond its initial offerings. The web-based approach enhances global accessibility, especially for iOS users who face regional app distribution constraints.

This initial content strategy positions Microsoft to rapidly iterate and expand, setting the stage for a competitive alternative to established app store giants.

Innovative Deployment Strategy

Leveraging a web-based deployment method, Microsoft is strategically positioning its new mobile app store to circumvent traditional app store constraints imposed by Apple and Google. This innovative approach not only sidesteps regulatory limitations but also enhances global accessibility by making the store available on both Android and iOS devices.

Central to this strategy is the integration of cloud gaming, which allows users to play high-quality games directly through the web without the need for extensive downloads or device-specific applications. By focusing on universal web access, Microsoft aims to provide a seamless and inclusive gaming experience, setting the stage for future expansion and broader third-party support.

This deployment strategy underscores Microsoft's commitment to redefining mobile app distribution.

Industry Impact and Challenges

The launch of Microsoft's web-based mobile app store marks a pivotal shift in the app distribution landscape, challenging the entrenched dominance of Apple and Google. By leveraging a web-based approach, Microsoft circumvents traditional app store restrictions, fostering a cross-platform, gaming-centric experience. This strategy acknowledges the burgeoning potential of cloud gaming, hinting at a future where high-quality games are delivered seamlessly over the internet.

However, the initiative faces significant challenges, including regulatory hurdles and the need to build a robust ecosystem that attracts third-party developers. Future expansion plans are essential for its success, aiming to enrich the store's functionalities and broaden its appeal.

Microsoft's bold move could redefine the landscape, promoting a more diversified and innovative app marketplace.

Metaverse Revolutionizes Work: Enhancing Collaboration and Training

As organizations navigate the evolving landscape of digital transformation, the Metaverse emerges as a pivotal tool in reshaping collaboration and training paradigms. Its immersive 3D environments and cutting-edge virtual and augmented reality technologies enable teams to seamlessly interact across global divides, fostering communication and efficiency. This innovation not only redefines teamwork but also revolutionizes training, providing tailored, interactive simulations that enhance learning and skill development. How does this integration impact company culture and workflow dynamics? The following sections will explore these transformative aspects in greater detail, shedding light on the profound implications for the modern workplace.

Key Takeaways

- Immersive 3D tools and environments enable effective teamwork, transcending geographical boundaries.

- Virtual team building in the Metaverse fosters deeper employee engagement and stronger interpersonal connections.

- Metaverse-based training offers interactive simulations, enhancing practical learning and reducing physical setup costs.

- Real-time VR and AR technologies support seamless virtual meetings and collaboration.

- The Metaverse nurtures inclusive, dynamic work environments, encouraging open communication and innovation.

Understanding the Metaverse

The Metaverse, a concept first introduced by Neal Stephenson in his 1992 novel Snow Crash, represents a shared 3D digital world characterized by scalability, real-time 3D interactions, and data continuity. This virtual domain leverages advanced 3D simulation technologies to create immersive environments where users can interact seamlessly.

Digital connectivity is at the core of the Metaverse, linking disparate virtual spaces into a cohesive, interconnected network. As current technologies evolve, they are increasingly meeting the demands of this expansive digital universe. Challenges such as data privacy and business agreements remain, but the potential for transformative digital experiences is undeniable.

Prominent figures like Satya Nadella and Mark Zuckerberg underscore its imminent mainstream adoption, heralding a new era of digital interaction.

Adapting Work Practices

Shifting work practices to the Metaverse requires a thorough reassessment of existing digital tools and processes. The migration to virtual meetings and remote teamwork necessitates the integration of advanced VR and AR technologies. These immersive solutions are not merely add-ons but pivotal in creating a seamless, collaborative work environment.

Companies must evaluate their current infrastructure, ensuring it supports real-time communication and data continuity. Additionally, reskilling employees becomes essential to harness the full potential of the Metaverse, preventing job displacement and enhancing productivity.

While technical hurdles and privacy concerns persist, the strategic adaptation of work practices offers unparalleled opportunities for innovation and efficiency in the digital workplace.

Enhanced Workplace Collaboration

Enhanced workplace collaboration in the Metaverse leverages advanced 3D tools and immersive environments to foster more effective teamwork and innovation.

By facilitating virtual teamwork, employees can interact in real-time within a shared digital space, transcending geographical boundaries. This immersive setting enhances communication, allowing for more nuanced and dynamic exchanges that are often lost in traditional remote work settings.

The Metaverse enables the creation of virtual meeting rooms, collaborative workspaces, and interactive project management tools, which streamline workflows and boost productivity. This transformation not only improves the quality of collaborative efforts but also accelerates decision-making processes and innovation.

As organizations adapt, the Metaverse stands poised to redefine how teams coalesce and achieve shared objectives.

Evolving Company Culture

Adopting the Metaverse within companies also catalyzes a transformation in organizational culture, fostering deeper employee engagement and innovative collaboration.

Virtual team building becomes a cornerstone, enabling geographically dispersed employees to participate in immersive experiences that strengthen interpersonal connections and enhance teamwork.

This digital paradigm shift nurtures a more inclusive and dynamic work environment, breaking down traditional hierarchies and encouraging open communication.

Additionally, immersive experiences in the Metaverse allow for creative problem-solving and brainstorming sessions, driving innovation and agility within the workforce.

Transformative Training Methods

Building on the cultural evolution within organizations, the Metaverse ushers in transformative training methods that redefine how employees acquire and apply skills.

By leveraging immersive simulations, companies can create realistic scenarios that enhance practical learning experiences, allowing employees to engage in interactive learning environments. This shift from traditional training methods facilitates a deeper understanding of complex concepts and improves retention rates.

Interactive learning through the Metaverse makes training more engaging and adaptable, catering to individual learning paces and needs. Additionally, these methods greatly reduce the risks and costs associated with physical training setups, particularly in high-risk industries.

Ultimately, the Metaverse is not just enhancing training but revolutionizing the very fabric of employee development and skill acquisition.

Chatbots: Revolutionising Customer Service and Operations for Australian Businesses

In recent years, Australian businesses have been increasingly turning to chatbot technology to enhance their customer service capabilities and streamline operations. This artificial intelligence-powered solution is transforming the way companies interact with customers and manage internal processes.

The Rise of Chatbots in Australia

Australian businesses across various sectors, from retail to finance, are embracing chatbot technology at a rapid pace. According to a recent study by Telsyte, over 70% of large Australian organisations have already implemented or are planning to implement chatbots within the next 12 months.

Key Drivers:

- Cost-effectiveness

- 24/7 availability

- Improved customer satisfaction

- Operational efficiency

Enhancing Customer Service

1. Round-the-Clock Support

One of the most significant advantages of chatbots is their ability to provide 24/7 customer support. This is particularly beneficial for Australian businesses operating in a global market, where time zones can be a challenge.

2. Instant Responses

Chatbots can provide immediate responses to customer queries, reducing wait times and improving overall customer satisfaction. For instance, major Australian retailer Myer has implemented a chatbot that can handle up to 70% of customer inquiries without human intervention.

3. Personalised Experiences

Advanced chatbots use machine learning algorithms to analyse customer data and provide personalised recommendations. Commonwealth Bank’s chatbot, Ceba, can assist customers with over 200 banking tasks while offering tailored financial advice.

Automating Business Operations

1. Streamlining Internal Processes

Chatbots are not just customer-facing tools; they’re also revolutionising internal operations. Australian businesses are using chatbots to automate various processes, including:

- HR queries

- IT support

- Meeting scheduling

- Expense reporting

2. Data Collection and Analysis

Chatbots serve as valuable data collection tools, gathering insights on customer preferences and behaviour. This data can be analysed to inform business strategies and improve products or services.

3. Lead Generation and Sales

Many Australian businesses are leveraging chatbots to qualify leads and even close sales. For example, real estate chatbots can schedule property viewings and provide virtual tours, streamlining the sales process.

Case Study: Qantas Airways

Qantas, Australia’s flagship carrier, has successfully implemented a chatbot named “Qantas Concierge” on Facebook Messenger. This chatbot assists customers with:

- Flight bookings

- Check-in procedures

- Baggage information

- Frequent flyer queries

Since its implementation, Qantas has reported a 20% reduction in call centre volume and a significant improvement in customer satisfaction scores.

Challenges and Future Outlook

While chatbots offer numerous benefits, Australian businesses face challenges in their implementation:

- Integration with existing systems: Ensuring seamless integration with current CRM and other business systems.

- Language processing: Improving natural language processing to better understand Australian colloquialisms and accents.

- Privacy concerns: Addressing data protection and privacy issues, particularly in light of Australia’s stringent privacy laws.

Despite these challenges, the future of chatbots in Australian business looks promising. As AI technology continues to advance, we can expect to see even more sophisticated chatbots capable of handling complex queries and tasks.

Conclusion

Chatbots are revolutionising the way Australian businesses approach customer service and operational efficiency.

By providing 24/7 support, personalised experiences, and automating internal processes, chatbots are helping companies reduce costs, improve customer satisfaction, and stay competitive in an increasingly digital marketplace.

As this technology continues to evolve, it’s clear that chatbots will play an increasingly crucial role in shaping the future of Australian business.

Google Unveils Cutting-Edge Gemini Tools for Developers

Google's recent introduction of the Gemini tools marks a pivotal advancement for developers, incorporating powerful AI capabilities through the Gemini language model. These tools, which seamlessly integrate with popular frameworks like TensorFlow and PyTorch, are designed to elevate development processes with enhanced natural language processing and image recognition. With the inclusion of advanced iterations such as 1.5 Flash and PaliGemma for multimodal tasks, the Gemini suite promises to transform how developers approach complex challenges. The implications for Android, web, and full-stack development are profound, but what specific innovations lie at the heart of these tools?

Key Takeaways

- Google introduces AI tools powered by the Gemini language model for enhanced development capabilities.

- Gemini tools integrate with frameworks like Keras, TensorFlow, PyTorch, JAX, and RAPIDS cuDF.

- New AI tools include the Gemini 1.5 Flash model and PaliGemma for multimodal tasks.

- Gemini Nano and AICore system services support on-device models in Android development.

- Chrome DevTools and Project IDX provide AI-powered insights and streamlined deployment to Cloud Run.

AI Tools for Developers

In an ambitious move to revolutionize the developer landscape, Google has introduced a suite of sophisticated AI tools powered by its advanced Gemini language model. By leveraging cutting-edge natural language processing and image recognition capabilities, these tools promise to enhance the efficiency and creativity of developers.

The expansion of the Gemini model includes the 1.5 Flash iteration, designed for high-frequency tasks, and PaliGemma, aimed at multimodal vision-language tasks. These innovations are seamlessly integrated with popular frameworks such as Keras, TensorFlow, PyTorch, JAX, and RAPIDS cuDF, enabling developers to build more intuitive, intelligent applications.

Google's strategic enhancements signal a significant leap forward in the field of AI-driven development, providing a robust foundation for future technological advancements.

Android Development Enhancements

Building on the momentum of AI tools for developers, Google's latest advancements in Android development are set to redefine app creation with the integration of Gemini in Android Studio.

The introduction of Gemini Nano and AICore system services enables on-device models, allowing developers to leverage powerful AI capabilities directly within their applications. This integration guarantees seamless performance and enhanced user experiences.

Moreover, the robust support for Kotlin Multiplatform facilitates code sharing across different platforms, streamlining the development process. Enhanced by performance optimizations in Jetpack Compose, developers can now build high-quality, responsive apps more efficiently.

Google's commitment to innovation in Android development underscores its mission to empower developers with cutting-edge tools and technologies.

Web Development Improvements

Google's recent enhancements in web development introduce powerful AI capabilities like Gemini Nano integration in Chrome desktop, revolutionizing on-device performance and user experience.

This integration allows developers to leverage AI directly within the browser, offering substantial performance optimizations.

The Speculation Rules API lets a page tell the browser which likely next pages to prefetch or prerender, shortening page load times and keeping navigation fluid across multi-page applications.

Additionally, the introduction of View Transitions ensures that moving between pages is seamless and visually appealing.

Chrome DevTools now provide AI-powered insights, simplifying the debugging process and enhancing developer productivity.

These advancements not only accelerate development workflows but also elevate the end-user experience by delivering faster, more responsive web applications.

Full-Stack Development Innovations

How will the latest full-stack development innovations from Google transform the landscape of unified development solutions?

Google's Project IDX offers a seamless environment by integrating Chrome DevTools and facilitating streamlined deployment to Cloud Run. This leverages the power of cloud deployment for robust, scalable applications.

Additionally, Flutter 3.22's performance enhancements with Impeller and web compilation support elevate cross-platform capabilities. Firebase's updates, including serverless PostgreSQL connectivity, simplify backend complexities.

Furthermore, Google's introduction of Checks, an AI-driven compliance automation platform, guarantees adherence to regulatory standards with minimal effort. These innovations collectively foster a cohesive, efficient development workflow, promising to revolutionize how developers create and deploy sophisticated, compliant applications across diverse environments.

Google Cloud Unleashes Revolutionary AI Agent Builder

Google Cloud has introduced its groundbreaking Vertex AI Agent Builder, a tool poised to profoundly transform the landscape of AI development. This innovative solution caters to developers across various skill levels, facilitating the creation of advanced AI agents for a multitude of applications. With its no-code console and seamless integration with open-source frameworks such as LangChain, the tool promises enhanced efficiency and accuracy. Additionally, multilingual support and advanced features like retrieval augmented generation (RAG) and vector search capabilities set this platform apart. What implications does this have for the future of AI-driven business solutions?

Key Takeaways

- Vertex AI Agent Builder empowers developers to create sophisticated AI agents for various applications.

- Offers a no-code console and integrates open-source frameworks like LangChain.

- Provides multilingual support and utilizes natural language inputs such as English, Chinese, and Spanish.

- Leverages retrieval augmented generation (RAG) and vector search for accurate and customized AI responses.

- Grounds model responses in real-time data from Google Search for dynamically updated outputs.

AI Agent Builder Overview

Vertex AI Agent Builder by Google Cloud is a versatile tool designed to empower developers at all skill levels to create sophisticated AI agents for diverse applications. This robust platform addresses a wide array of use cases, from enhancing customer service through conversational AI to streamlining business operations with intelligent process automation.

The user experience is meticulously crafted to be intuitive, ensuring that even developers with minimal coding expertise can leverage its capabilities through a no-code console. For seasoned experts, the integration of open-source frameworks like LangChain provides added flexibility.

Key Features

Among the key features of Google Cloud's AI Agent Builder, the ability to utilize natural language inputs such as English, Chinese, and Spanish stands out for its potential to enhance user interaction and accessibility. This multilingual support enables important natural language capabilities essential for conversational commerce applications.

By leveraging retrieval augmented generation (RAG), the AI Agent Builder grounds its responses in retrieved documents, which improves accuracy and contextual relevance without retraining the underlying model. In addition, the inclusion of vector search allows for custom embeddings-based RAG systems, fostering more precise and efficient information retrieval.
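To make the idea of embeddings-based retrieval concrete, here is a minimal Python sketch. It is not the Vertex AI API: the `embed`, `retrieve`, and `build_prompt` names are hypothetical, and the "embedding" is a toy bag-of-words count standing in for a real embedding model. It only illustrates the general pattern of ranking documents by vector similarity and placing the best matches into the prompt.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts stand in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Vector search: return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it is sent to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Refunds are issued within 14 days of purchase",
    "Our office is open Monday to Friday",
    "Shipping takes 3 to 5 business days",
]
print(build_prompt("How many days until refunds are issued?", docs, k=1))
```

In a production system the toy `embed` function would be replaced by a learned embedding model and the sorted scan by a vector index, but the retrieve-then-generate flow stays the same.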

These advanced features collectively empower developers to build robust, intelligent agents that can seamlessly integrate into diverse business environments, driving innovation and operational efficiency.

Enhancing AI Outputs

To further enhance the outputs generated by these advanced AI agents, grounding model responses in real-time data from Google Search guarantees that the information remains relevant and accurate. This approach not only improves efficiency but also greatly enhances performance by ensuring that the AI outputs are dynamically updated and contextually pertinent.

Additionally, the use of data connectors to ingest information from various business applications further enriches the AI agents' responses, providing a more thorough solution. Deploying multiple agents for complex use cases and supporting various large language models (LLMs) allows for tailored, high-performance interactions.

This holistic framework ensures that AI outputs are both precise and robust, meeting the innovative needs of today's dynamic business environments.

Regional and Security Insights

The intersection of regional developments and cybersecurity advancements is pivotal in shaping the future landscape of AI adoption and implementation.

For instance, SAP's accessibility initiatives in the Asia-Pacific (APAC) region are democratizing AI technologies, enabling organizations to leverage advanced tools without extensive technical expertise.

Simultaneously, DBS Bank's foundation in robust data management is setting a benchmark for financial institutions aiming to integrate AI with stringent security protocols.

These regional strides are complemented by global cybersecurity efforts, such as Operation Endgame's success in dismantling botnets, which underscore the importance of secure AI deployment.

GitHub Unveils Cutting-Edge Developer Trends Update

GitHub's latest update on developer trends reveals a fascinating shift in the landscape of software development, driven by the increasing adoption of AI technologies. A particularly noteworthy aspect is the integration of AI-driven tools for project documentation and chat-based generative AI, which is streamlining processes and transforming coding workflows globally. This trend underscores the pivotal role of AI in enhancing both core and supplementary development activities. For a deeper understanding of these trends and to explore the specific insights uncovered for UK developers, as well as the emerging programming languages gaining traction, one must consider the broader implications of these advancements.

Key Takeaways

- Increased AI adoption is transforming code development and enhancing documentation workflows.

- UK developers favor JavaScript, Python, and Shell, collaborating extensively with global peers.

- GitHub data reveals a surge in AI-driven project documentation tools.

- Seasonal events like Hacktoberfest highlight evolving developer behaviors and trends.

- Advent of Code spurs interest in niche programming languages like COBOL and Julia.

Global Developer Activity Trends

GitHub's recent data from Q4 2023 reveals significant insights into global developer activity, highlighting a marked increase in the adoption of AI technologies. This surge is evident in the widespread integration of AI-driven tools, particularly in project documentation trends.

Developers are increasingly leveraging chat-based generative AI to streamline documentation processes, thereby enhancing efficiency and accuracy. The data underscores a paradigm shift where AI adoption is not only transforming code development but also optimizing ancillary tasks like documentation.

This trend suggests a future where AI tools are integral to both core and supplementary development activities, reflecting the industry's commitment to innovation and productivity. This insight is vital for stakeholders aiming to stay ahead in the rapidly evolving tech landscape.

UK Developer Insights

With over 3,595,000 developers and 195,000 organizations active, the UK demonstrates robust engagement on GitHub. The British developer community is particularly dynamic, contributing to over 8.3 million repositories.

UK coding preferences show a strong inclination towards JavaScript, which leads the charts, followed closely by Python and Shell. The UK developers' collaborative efforts extend globally, with significant interactions with peers in the United States, Germany, and France. This vibrant ecosystem underscores the UK's pivotal role in the global development landscape.

Additionally, the British developer community's frequent code uploads, totaling over 5.3 million, highlight their proactive approach to innovation and technology advancement. The UK's coding environment remains an important contributor to GitHub's expansive network.

Innovation Graph Metrics

Building on the strong engagement observed in the UK, the Innovation Graph Metrics offer a thorough analysis of developer activities, capturing trends through metrics like Git pushes and repository creation over a four-year period.

The data reveals a notable increase in AI adoption, driven by the integration of AI tools in coding workflows. An intriguing trend is the documentation impact, greatly enhanced by chat-based generative AI tools, which streamline and enrich project documentation.

Seasonal patterns such as Hacktoberfest provide further insights into developer behavior and engagement. By focusing on relevant data, these metrics enable stakeholders to understand shifts in developer activities and the growing influence of AI, fostering a more innovative and efficient coding environment.

New Programming Language Exploration

Advent of Code has catalyzed a surge in interest for obscure programming languages, offering developers a unique platform to experiment with languages such as COBOL, Julia, ABAP, Elm, Erlang, and Brainf*ck.

This annual coding challenge has become a significant driver for the exploration of niche languages, enabling developers to diversify their skillsets and solve complex problems in innovative ways.

The rise in popularity of these niche languages is reflected in GitHub's latest data, showing increased repository activity and contributions.

Leveraging the Advent of Code challenges, developers are pushing the boundaries of traditional programming paradigms, thereby fostering a culture of continuous learning and technological advancement within the global developer community.

Unveiling Lessons From Record-Breaking DDOS Assault

Amidst the backdrop of an unprecedented DDoS assault, critical lessons emerge that underscore the necessity for robust cybersecurity measures. This incident sheds light on the significance of regularly patching vulnerabilities, implementing proactive strategies, and adopting layered defenses. Moreover, the importance of industry collaboration to share insights and bolster collective security cannot be overstated. As we dissect the attack vectors and response mechanisms utilized during this event, it becomes evident that understanding these elements is crucial for fortifying our defenses. What specific strategies should organizations consider to mitigate such formidable cyber threats?

Key Takeaways

- Layered defenses, like rate limiting and adaptive policies, are essential to mitigate sophisticated DDoS attacks effectively.

- Regularly patching vulnerabilities is critical to prevent security breaches from being exploited during DDoS assaults.

- Proactive cybersecurity measures, including real-time monitoring and behavioral analysis, enable rapid identification and mitigation of threats.

- Industry collaboration and threat intelligence sharing enhance defenses and provide a comprehensive understanding of emerging vulnerabilities.

- Automated systems streamline threat detection and response, ensuring a dynamic and resilient security posture against DDoS attacks.

Patch Vulnerabilities Regularly

Regularly patching vulnerabilities is an essential cybersecurity practice to mitigate the risk of cyber attacks. Unpatched vulnerabilities, such as the recently exploited HTTP/2 Rapid Reset zero-day (CVE-2023-44487), can lead to severe security breaches.

Automated patching solutions and robust vulnerability management frameworks are crucial for addressing known flaws efficiently. Zero-day exploits are harder: by definition no patch exists when the attack begins, so traditional patching lags and swift interim mitigation is required.

Automation can shorten the window between a fix being released and being deployed, but the unpredictability of zero-day threats underscores the need for proactive vulnerability management that combines automated tools with strategic oversight.

Proactive Cybersecurity Measures

To complement the patching of vulnerabilities, implementing proactive cybersecurity measures is imperative for identifying and mitigating potential threats before they escalate into full-blown attacks. Security automation plays a critical role, enabling real-time monitoring and rapid response to anomalies.

By leveraging behavioral analysis, organizations can detect unusual patterns in network traffic, flagging potential DDoS activities and other cyber threats early. Integrating these advanced techniques guarantees a dynamic and responsive security posture.

Additionally, automated systems can streamline threat identification, reducing reliance on manual processes and allowing for more efficient allocation of resources. Proactive measures, including automated traffic filtering and continuous monitoring, are essential for maintaining robust cybersecurity defenses in an ever-evolving threat landscape.
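As an illustration of behavioral analysis on network traffic, the following Python sketch flags minutes whose request count sits far above a rolling baseline. The function name, window size, and threshold are assumptions chosen for the example, not values from any particular product; real systems use richer signals than a single z-score.

```python
from statistics import mean, pstdev


def find_traffic_anomalies(requests_per_minute, window=5, threshold=3.0):
    """Return the indices of samples far above the preceding rolling baseline.

    A sample is flagged when it exceeds the mean of the previous `window`
    samples by more than `threshold` standard deviations.
    """
    anomalies = []
    for i in range(window, len(requests_per_minute)):
        baseline = requests_per_minute[i - window:i]
        mu = mean(baseline)
        # Floor sigma so a perfectly flat baseline does not divide by zero.
        sigma = max(pstdev(baseline), 1.0)
        z_score = (requests_per_minute[i] - mu) / sigma
        if z_score > threshold:
            anomalies.append(i)
    return anomalies


samples = [100, 102, 98, 101, 99, 100, 5000, 103]
print(find_traffic_anomalies(samples))
```

With the sample data, only the spike to 5000 requests is flagged; the following minute is not, because the spike itself has inflated the baseline. That side effect is one reason production detectors usually exclude flagged samples from later baselines.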

Implement Layered Defenses

Implementing layered defenses within an organization's infrastructure is crucial for creating a resilient cybersecurity framework capable of mitigating sophisticated DDoS attacks. A multi-faceted approach, integrating customized protections and adaptive policies, guarantees robust defense mechanisms.

Customized protections include rate limiting, which manages traffic flow, and global load balancing, which distributes incoming requests to prevent overloads. Adaptive policies, tailored to evolving threat landscapes, dynamically adjust based on real-time traffic analysis and behavioral patterns.
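Rate limiting can be implemented in several ways. The sketch below uses a token bucket, one common technique; the text above does not say which algorithm any particular provider uses. The `TokenBucket` class and its parameters are hypothetical names for illustration, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter: allows bursts up to `capacity`,
    then sustains at most `rate` requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate              # tokens added per second
        self.capacity = capacity      # maximum burst size
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# Usage with a fake clock so the example is deterministic.
fake_time = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_time[0])
burst = [bucket.allow() for _ in range(4)]   # the fourth request exceeds the burst
fake_time[0] = 1.0                           # one second later, two tokens are back
after_refill = [bucket.allow() for _ in range(3)]
print(burst, after_refill)
```

In practice one bucket is typically kept per client key (for example per IP address or API token), so that a single noisy source is throttled without affecting others.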

Industry Collaboration

Building on the robust framework of layered defenses, collaboration with industry peers plays a pivotal role in enhancing an organization's ability to mitigate sophisticated DDoS attacks.

Collaborative strategies facilitate a thorough exchange of threat intelligence, enabling organizations to preemptively address emerging vulnerabilities. Information sharing amongst stakeholders, including software maintainers and cloud providers, fosters a holistic understanding of threat landscapes.

The concerted efforts of industry giants like Google, Cloudflare, and AWS during recent DDoS incidents exemplify the power of unified defenses. By synchronizing mitigation tactics and sharing real-time data, organizations can deploy adaptive protections more effectively.

In an era of escalating cyber threats, such collaborative efforts are indispensable for fortifying defenses against increasingly complex DDoS assaults.

Which web hosting service to choose for a WordPress site?

WordPress powers over 40% of all websites on the internet, making it the most popular content management system (CMS) worldwide. While creating a WordPress site may seem straightforward at first glance, it requires careful consideration when it comes to hosting.

Selecting the right web hosting service is crucial for your site’s performance, security, and scalability. This comprehensive guide will help you navigate the process of choosing the ideal WordPress hosting solution for your needs.

Understanding WordPress Hosting

WordPress hosting refers to web hosting services specifically optimized for WordPress websites. These hosting solutions are designed to meet the technical requirements of WordPress and often come with additional features to enhance your site’s performance and management.

Key Technical Requirements for WordPress Hosting:

- PHP version 7.4 or greater

- MySQL version 5.7 or greater OR MariaDB version 10.2 or greater

- HTTPS support

- mod_rewrite Apache module (for pretty permalinks)
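The version requirements above can be checked programmatically. This Python sketch compares dotted version strings numerically; the `MINIMUMS` table simply restates the list above, and the helper names are hypothetical. It assumes plain numeric versions such as `8.1.12` and would need extra parsing for distribution suffixes.

```python
# Minimum versions restated from the requirements list above.
MINIMUMS = {"php": "7.4", "mysql": "5.7", "mariadb": "10.2"}


def parse_version(version: str) -> tuple[int, ...]:
    """Turn '10.11.2' into (10, 11, 2) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def meets_minimum(component: str, installed: str) -> bool:
    """Return True if the installed version satisfies the listed minimum."""
    return parse_version(installed) >= parse_version(MINIMUMS[component])


print(meets_minimum("php", "8.1.12"))
print(meets_minimum("php", "7.3.33"))
print(meets_minimum("mariadb", "10.11"))
```

Comparing tuples of integers rather than raw strings matters here: as strings, "10.11" sorts before "10.2", which would wrongly reject a newer MariaDB release.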

Types of WordPress Hosting

There are several types of hosting services available for WordPress sites, each with its own advantages and considerations:

1. Shared Hosting

Pros:

- Most affordable option

- Suitable for beginners and small websites

- Easy to set up and manage

Cons:

- Limited resources

- Performance can be affected by other sites on the same server

- Less control over server configuration

2. Virtual Private Server (VPS) Hosting

Pros:

- Dedicated resources

- Better performance than shared hosting

- More control over server configuration

Cons:

- Requires more technical knowledge to manage

- More expensive than shared hosting

3. Dedicated Server Hosting

Pros:

- Full control over server resources and configuration

- Highest level of performance and security

- Ideal for high-traffic websites

Cons:

- Most expensive option

- Requires advanced technical skills to manage

4. Managed WordPress Hosting

Pros:

- Optimized specifically for WordPress

- Automatic updates and backups

- Enhanced security features

- Expert WordPress support

Cons:

- More expensive than general hosting options

- May have limitations on plugin usage

5. Cloud Hosting

Pros:

- Scalable resources

- High reliability and uptime

- Pay-as-you-go pricing model

Cons:

- Can be more complex to set up and manage

- Costs can fluctuate based on usage

Key Factors to Consider When Choosing WordPress Hosting

When selecting a WordPress hosting service, consider the following criteria:

1. Performance and Speed

Look for hosts that offer:

- SSD storage

- Content Delivery Network (CDN) integration

- Caching solutions

- PHP 7+ support

2. Security Features

Prioritize hosts with:

- SSL certificates

- Regular malware scanning

- Automated backups

- Firewall protection

3. Scalability

Ensure your host can accommodate your site’s growth by offering:

- Easy upgrades to higher plans

- Ability to handle traffic spikes

- Seamless resource allocation

4. WordPress-Specific Features

Look for hosts that provide:

- One-click WordPress installation

- Automatic WordPress core updates

- Staging environments for testing

5. Customer Support

Choose a host with:

- 24/7 support availability

- WordPress-specific expertise

- Multiple support channels (live chat, phone, email)

6. Pricing and Value

Consider:

- Initial costs and renewal rates

- Included features in each plan

- Money-back guarantee

Recommended WordPress Hosting Providers

While the best hosting provider depends on your specific needs, here are some reputable options to consider:

- Bluehost – Official WordPress.org recommended host

- SiteGround – Known for excellent performance and support

- WP Engine – Premium managed WordPress hosting

- Kinsta – High-performance managed WordPress hosting

- DigitalOcean – Developer-friendly cloud hosting with WordPress options

Conclusion

Choosing the right web hosting service for your WordPress site is a crucial decision that can significantly impact your site’s success.

By considering factors such as performance, security, scalability, and support, you can select a hosting provider that aligns with your needs and budget. Remember to regularly reassess your hosting needs as your site grows and evolves.

Whether you’re launching a personal blog or a high-traffic e-commerce site, there’s a WordPress hosting solution out there for you. Take the time to research and compare options before making your decision, and don’t hesitate to take advantage of free trials or money-back guarantees to ensure you’ve found the right fit.

Amazon, Google and Microsoft start hybrid cloud war

More than ever, CIOs find themselves running a two-speed information system. They must maintain and evolve the existing IT infrastructure while migrating components step by step to the public cloud. This has not escaped the attention of the cloud giants, who now offer hybrid solutions that build bridges between the old world and the new.

It is a way to win new customers beyond outright public cloud conversions. Providers have approached this market differently. “Microsoft Azure and AWS started with IaaS services before gradually expanding their offerings. Google has chosen to go all-in on containers. This is consistent with its strategy and its solutions aimed at developers,” says Damien Rollet, cloud architect and DevOps at Ippon Technologies.

Google Cloud Anthos: all-in on containers

Anthos was undoubtedly the most talked-about announcement of Next ’19, the Google Cloud conference held this year in early April. In fact, it is the new name of Cloud Services Platform, launched a year earlier. Like Azure and AWS, the web giant is offering to embed its technologies in its customers’ data centers. What sets it apart is that Anthos opens the way to multicloud by managing workloads running on third-party clouds. To speed up the transition, Google Cloud also announced Anthos Migrate, a beta service that automatically migrates virtual machines from an on-premises environment to the public cloud.

Azure Stack, pioneer award

For once, Amazon Web Services (AWS) was beaten to market by Microsoft. After about a year and a half of preview releases, Azure Stack reached general availability in July 2017. It is an extension of Azure that lets a company run cloud services in an on-premises environment.

As usual, Microsoft started by providing IaaS services to recreate a cloud infrastructure within an internal perimeter, with virtual machines, storage resources and a virtual network. The Redmond-based company can rely on its strong presence in data centers through Windows Server and its Hyper-V virtualization solution.

AWS Outposts, the VMware asset

A new service announced in November 2018, Outposts is part of AWS’ strategy to conquer private clouds. Following the partnership with VMware introduced two and a half years ago, Amazon’s subsidiary is taking the hybrid world a step further.

Unlike Microsoft, which has established partnerships with hardware manufacturers, AWS has chosen to offer an infrastructure (including hardware) that it designs itself, promising the same level of service as its public cloud. A customer can run EC2 compute and EBS storage services on premises. In addition to this IaaS layer, AWS plans to add services such as RDS, ECS, EKS, SageMaker and EMR over the coming months.

USA: Tim Cook wants to supervise personal data merchants

Known for his opposition to the excessive collection and processing of personal data, Tim Cook has issued an official statement to the US authorities. Apple’s boss is calling for stronger legislation and guidance for data brokers.

Between generalist positions and clear attacks on competitors (Google), it has sometimes been difficult in the past to know whether Tim Cook was a genuine defender of the right to privacy. However, with his article published this week in Time magazine, Apple’s boss seems to have removed any ambiguity. Without pointing fingers or referring to any particular case, he explains that everyone should have the right to control their digital life, which is currently not the case in the United States. Addressing the authorities, he therefore calls for new legislation not unlike the European framework. He also calls on the regulator to put an end to the largely unregulated trade in personal data.

According to Tim Cook, giving American Internet users control over their data requires Congress to take up the subject and pass a federal law. He cites four principles that, in his opinion, should guide its drafting. First, companies should be required to do their utmost to strip identifying information from customer data, or to avoid collecting such data at all. Second, Internet users should have the right to know what data is being collected and for what purpose. Third, they should have a right of access, including the ability to have their data corrected or deleted by companies. Finally, a right to data security should exist.

The need to regulate data resale

But, according to Tim Cook, even with these principles in mind, one law may not be enough. The problem is not limited to the initial collection of data, and Internet users do not always have the tools to track where their data goes. Apple’s boss is thinking in particular of brokers that specialize in buying and reselling batches of data.

Tim Cook denounces a secondary market that lacks oversight. A consent requirement should improve the situation, but there must also be a way to verify that it is respected. Here, Apple’s boss turns not to Congress but to the FTC, the regulator. He suggests that it create a body dedicated to monitoring this market, which would require all data brokers to register and would let Internet users track their data and have it deleted via a simple online request.

Of course, Tim Cook is aware that he is raising a debate involving many interests and that his proposals will not be taken up as they stand. However, he would like to point out that the stakes are high since it is a question of controlling personal data.

How To Choose The Best Web Hosting For WordPress

WordPress powers over 40% of all websites on the internet, making it the most popular content management system by far.

If you’re planning to create a WordPress website, choosing the right web hosting is crucial for your site’s performance, security, and success. This comprehensive guide will walk you through the key factors to consider when selecting a WordPress host.

Understanding WordPress Hosting Options

Before diving into specific factors, it’s important to understand the main types of WordPress hosting available:

- Shared Hosting: The most affordable option where your site shares server resources with other websites. Suitable for small sites with low traffic.

- Virtual Private Server (VPS) Hosting: Provides dedicated resources within a shared environment, offering better performance and scalability than shared hosting.

- Dedicated Server Hosting: Gives you an entire physical server for your website, ideal for large, high-traffic sites requiring maximum control and performance.

- Managed WordPress Hosting: Specialized hosting optimized for WordPress with enhanced security, performance, and support features.

- Cloud Hosting: Utilizes a network of connected servers to provide flexible, scalable hosting resources.

Now let’s explore the critical factors to consider when choosing a WordPress host.

WordPress Compatibility and Optimization

Native WordPress Support

While most web hosts support WordPress, look for providers that offer:

- One-click WordPress installation

- Automated WordPress core updates

- WordPress-specific security features

- Optimized server configurations for WordPress

Managed WordPress Hosting

For serious WordPress users, managed WordPress hosting offers several advantages:

- Servers optimized specifically for WordPress performance

- Enhanced security measures tailored to WordPress vulnerabilities

- Automatic updates for WordPress core, themes, and plugins

- Regular backups and easy restoration options

- Expert WordPress support staff

- Staging environments for testing changes

While managed WordPress hosting typically costs more than standard hosting, the added features and peace of mind are often worth the investment for business-critical websites.

Performance and Reliability

Server Resources

Ensure the hosting plan provides adequate resources for your needs:

- CPU allocation

- RAM

- Storage space (SSD preferred for faster performance)

- Bandwidth/data transfer limits

Uptime Guarantee

Look for hosts that offer at least a 99.9% uptime guarantee. However, be sure to read the fine print and understand how uptime is calculated and what compensation is offered for downtime.
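To see what an uptime guarantee permits in practice, the following Python sketch converts a percentage into allowable downtime. The function name and the 30-day billing period are assumptions for illustration; hosts may measure uptime over different periods and exclude scheduled maintenance, which is exactly the fine print worth checking.

```python
def allowed_downtime_minutes(uptime_percent: float, days: int = 30) -> float:
    """Minutes of downtime permitted over `days` at a given uptime percentage."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


for guarantee in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(guarantee)
    print(f"{guarantee}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

A 99.9% guarantee therefore still permits roughly 43 minutes of downtime in a 30-day month, while 99% permits more than seven hours.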

Amazon AWS to offer its RDS database service on VMware

At VMworld US, Amazon AWS announced its intention to offer a version of its RDS managed database service for VMware private cloud environments. The objective is to enable the implementation of hybrid architectures by companies, but also eventually the migration to AWS.

Since last year, VMware has been marketing a public cloud service, VMware on AWS, hosted on the cloud giant’s infrastructure. The goal is to give companies a public cloud counterpart to the vendor’s private cloud offering, and thus homogeneous hybrid cloud services. To build this offer, VMware relied on bare-metal machines provided by AWS.

But this year, it was AWS that announced its intention to use VMware technologies to deliver its database-as-a-service offering, RDS (Relational Database Service), in an on-premises version. RDS should thus become AWS’ first genuinely hybrid infrastructure service (the provider already offers an on-premises extension of its public IoT service, Greengrass).

AWS RDS: facilitate the deployment and management of databases

Amazon Relational Database Service is Amazon Web Services’ managed SQL database offering. It supports a wide range of database engines including MySQL, MariaDB, PostgreSQL, Oracle, Microsoft SQL Server, and Amazon’s own SQL database, Aurora. RDS ensures automated provisioning of database instances and incorporates sophisticated replication, migration and backup mechanisms.

The technology also handles synchronous and asynchronous database replication and can orchestrate automatic failover between availability zones in the event of a failure. All RDS functions are controlled through the AWS Management Console, the Amazon RDS APIs or the AWS command line interface.

Speaking on stage at the opening keynote of VMworld, AWS CEO Andy Jassy explained that RDS on VMware would offer the same service on on-premises VMware clusters as RDS on AWS, except for support of Aurora, Amazon’s in-house DBMS.

Amazon will offer a preview of the service in the coming months. Jassy did not specify the prerequisites for running it, nor did he say how the future RDS on VMware would be administered. He indicated only that the replication mechanism AWS uses in the cloud would have an on-premises equivalent, since it will be possible to replicate databases between VMware clusters.

Towards an opening of AWS technologies to on-premises?

The RDS on VMware offering breaks with Amazon’s firm policy of offering its technologies only on its public cloud. It could signal the supplier’s desire to deploy its solutions more widely in hybrid mode.

By partnering with AWS on hybrid services, VMware can provide an alternative to the hybrid offerings from Microsoft (with Azure Stack) and Nutanix.

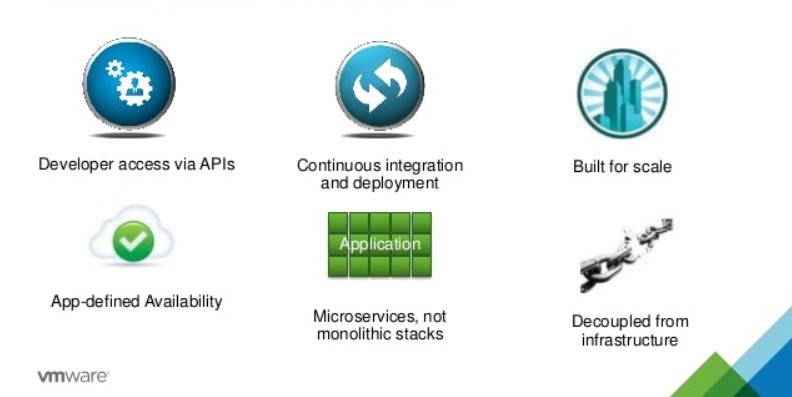

Everything you Need to Know about Cloud-Native Applications

In today’s fast-paced world, with its proliferation of data and of the devices that create it, this approach is no longer viable. Instead, companies are striving to unlock the value of this data surge and are asking their developers to respond with highly scalable solutions on ever-tighter deadlines.

What is Cloud-Native Computing?

A new paradigm has emerged, driven by the need for scalability, flexibility, and agility. It is further supported by both the declining cost of cloud computing services and the growing ability of applications and networks to bridge the performance gap between local and remote computing.

Cloud-native applications run exclusively on cloud-based infrastructure and are designed specifically to take advantage of the cloud’s new features and functionality.

To get to this stage, you must first migrate applications from on-premises infrastructure to cloud-based infrastructure, using your cloud provider’s infrastructure-as-a-service (IaaS) offerings. The first advantage is the elimination of upfront investment costs. Of course, the process is not quite that simple, but it can be achieved by replicating the on-site infrastructure with software and hardware components that work together.

For example, you can replicate a 50-node cluster by renting and connecting 50 virtual machines in the cloud and installing the same applications and operating systems that run on site.

While offering the standard benefits of cloud computing (such as replacing capital expenditures with operating expenses, flexible on-demand services, and lower maintenance), this type of configuration is only a stepping stone to a 100% cloud-native configuration. The next step is therefore to migrate to an environment where your cloud provider’s platform as a service (PaaS) abstracts away the server operating system and lets the enterprise focus on the applications and services it provides, rather than on how it delivers them.

This is the first point of contact with the “cloud native” concept. It requires a redesign of applications and their interactions but means that business imperatives can guide design, and thus results.

Cloud-native applications go even further. In a PaaS model, the underlying platform provides pre-configured operating system images that require no patching or maintenance and can be scaled automatically based on application load. A cloud-native model extends the PaaS concept by giving developers a complete abstraction of the underlying infrastructure, through a model billed by execution time that adapts automatically to each trigger call.

This implies that applications are broken down into their individual functions (small blocks of code), each written in the language best suited to it, be it JavaScript, C#, Python or PHP, or a scripting language such as Bash, Batch or PowerShell. These functions can then be triggered in various ways, including by HTTP requests, so that they can react to various events. Cloud-native applications are thus made up of small components that can be developed, tested and deployed quickly. Some companies deploy dozens of new code fragments every day. Why not yours?
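
As a rough illustration of one such function, the sketch below shows a small block of Python that reacts to an HTTP-style trigger. The event and response shapes (`query`, `status`, `body`) and the name `handle_request` are assumptions made for this example; each cloud provider defines its own handler signature and event format.

```python
import json


def handle_request(event: dict) -> dict:
    """Hypothetical HTTP-triggered function: greet the caller by name."""
    # Read an optional "name" query parameter from the assumed event shape.
    params = event.get("query", {})
    name = params.get("name", "world")
    body = json.dumps({"message": f"Hello, {name}!"})
    return {
        "status": 200,
        "headers": {"Content-Type": "application/json"},
        "body": body,
    }


# Simulate the platform invoking the function for one incoming request.
response = handle_request({"method": "GET", "query": {"name": "Ada"}})
print(response["status"], response["body"])
```

Because the function holds no state and depends only on its input event, the platform is free to run as many copies as the incoming triggers require.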

Key benefits of Cloud-Native Applications

Conclusion

The concept of cloud-native applications is still in its infancy. However, there is strong momentum behind the idea of agile applications that deliver results quickly while reducing costs. Given that the leading cloud computing providers support the concept, which itself demonstrates business demand, it would be unwise to ignore it.

Four Reasons to Set Up Data Backup Solutions Today

Many business owners put off setting up data backups because they do not want to spend the time or resources. But every day without a backup is a risk you are taking.

Every business in the modern economy relies on computers, networks and the data saved on its devices. These businesses would not survive if they lost that data. It does not matter whether you have a startup or a company with a presence in three different cities.

If you were to lose your data without an appropriate backup, it would hurt your company in immeasurable ways. Here are four reasons why it is important to set up data backup solutions.

- Simple Recovery

People make mistakes all the time. Your employees are human beings and they are going to slip up. Say you have many workstations and devices set up across the office. These devices connect your employees to the company network so that they can work.

Maybe an employee opens a phishing email and ends up with a virus on the computer. Without a real-time backup, it would take an hour or more to get that computer working again. With backups, you just have to restore the previous saved point. The system is clean again, because the infected files are wiped as part of the restore.

- Keeping Company Records Intact

There are so many important company records that are now saved on computers. In the past, these documents were printed out and filed into cabinets. Most companies would have photocopies to ensure they were safe if something happened to the original document.

Data backups are the digital version of creating multiple copies. Say you have relevant tax documents saved on your computers. Or maybe it is financial data from the past few years. These are important files that you cannot afford to lose. Without a data backup solution, these files are at risk.
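
The idea of a digital photocopy can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a complete backup solution: the helper names `sha256_of` and `back_up` and the file name are hypothetical, and a real setup would also copy to a separate device or to the cloud.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def back_up(source: Path, backup_dir: Path) -> Path:
    """Copy a file into backup_dir and verify that the copy matches the original."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)  # copy2 also preserves timestamps
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"Backup of {source} failed verification")
    return target


# Demonstrate with a throwaway directory so no real files are touched.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "tax_records.txt"
    original.write_text("2023 tax filing")
    copy = back_up(original, Path(tmp) / "backup")
    print(copy.read_text())
```

Comparing checksums after the copy is a simple way to confirm that the backup is a faithful duplicate rather than a truncated or corrupted file.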

- Getting a Competitive Advantage

What happens when a company faces a setback with its workstations or devices? It has to turn to the data backup. But what if there is no data backup? The company loses time every second it is unable to restore its data.

A company with a proper backup system does not lose that time. By ensuring that your data is backed up, you gain a competitive advantage over companies that did not take the time to set up proper backups.

- Protection Against Natural Disasters

A study from 2007 showed that in the United States, around 40 percent of businesses that suffer a major data loss do not reopen. And many of these data losses are not because of some complicated technological issue. They happen because of a physical disaster. Floods, storms, earthquakes and hurricanes are just some of the natural disasters that can hurt a business.

They could hurt your business too. But if you have cloud backups of your data, you will be up and running within days. Without those backups, you could be out of commission for months – or permanently.

Benefits of Server-Based Networks for Small Businesses

Every business has its own way of operating. But as a general rule, small businesses that are not using server-based networks are putting themselves at an unnecessary disadvantage.

Connecting computers in a peer-to-peer (P2P) manner is fine when we are talking about two or three computers. If you have a startup or a solo operation, with one or two extra people helping you, a server-based network is not necessary.

But if you have five or more employees who are working together, it does not make sense to continue with a P2P setup. A server-based network is the way to go. And here are a few reasons why it is the best option:

- Servers Bring Added Reliability

The very foundation of a successful business is reliability. In the past, it meant a different type of reliability. Now we are more reliant on technology than ever before. A reliable network is vital to your operations.

Say you are operating P2P. It means that every PC on the network is crucial to the entire network staying active. If one computer goes down, your whole network is compromised. That means downtime, which costs you money.

Servers work differently. The hardware is built with redundancy to protect against failures. Even if one component fails, the entire system will continue running. And the component can be repaired while the system is still active.

- Servers Bring Better Security

When a P2P network is in operation, maintaining security tiers is very difficult. In most cases, you will have two tiers. You have the administrator who has complete access and you have the users who have limited access.

With a server, you can set up many different categories of users. In fact, every single account can have its own set of permissions. This ensures that no one has unnecessary access to vital company data.
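
Per-account permissions can be pictured as a simple lookup table. The sketch below is illustrative only: the account names, action names and the `is_allowed` helper are hypothetical and do not correspond to any particular server product, which would manage this through its own user and group administration tools.

```python
# Hypothetical accounts and the actions each one has been granted.
ACCOUNT_PERMISSIONS = {
    "owner": {"read_files", "write_files", "manage_accounts"},
    "bookkeeper": {"read_files", "write_files"},
    "contractor": {"read_files"},
}


def is_allowed(account: str, action: str) -> bool:
    """Return True only if the account was explicitly granted the action."""
    return action in ACCOUNT_PERMISSIONS.get(account, set())


print(is_allowed("bookkeeper", "write_files"))  # True
print(is_allowed("contractor", "write_files"))  # False
print(is_allowed("unknown", "read_files"))      # False
```

Denying anything that was not explicitly granted, including requests from unknown accounts, is what keeps unnecessary access away from vital company data.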

- Servers Bring Better Remote Accessibility

Even the most basic and inexpensive servers allow for remote access. Windows Server 2008 allowed two remote users to access the network. Modern servers offer much more flexibility when it comes to remote access. With a P2P network, such remote access is not possible.

Having employees work remotely is a major part of how modern businesses are run. It is even more crucial for a startup, as you may have employees who are working other jobs at the same time. Now they can do work for your company while they are at another location.

- Servers Bring Proper Virus Management

Managing antivirus and anti-malware software is easy on a server-based network. The main computer that controls the server can take care of installing these programs, and they can also be updated from that computer.

If you were running a P2P network, you would have to manually take care of the antivirus programs on each device.

Yes, a P2P network is still relevant. It is useful when you have a solo operation or a business with only two or three employees. But the moment you have five or more employees, it makes sense to go with a server-based network.

info@databaseanswers.com

Lemont, IL 60439

Weekends: by appointment
